MiniGPT-4: A Leap Forward in Vision-Language Understanding with Advanced Large Language Models

The cutting-edge GPT-4 has recently demonstrated extraordinary multi-modal abilities, such as generating websites directly from handwritten text and identifying humorous elements within images. Such abilities are rarely observed in previous vision-language models. The MiniGPT-4 researchers hypothesize that GPT-4's advanced multi-modal generation capabilities stem primarily from its use of a more advanced large language model (LLM). To explore this hypothesis, a team of researchers led by Deyao Zhu and Jun Chen introduced MiniGPT-4, which aligns a frozen visual encoder with a frozen LLM, Vicuna, using just one projection layer.

Research Findings and Emerging Capabilities

The team's findings reveal that MiniGPT-4 possesses many capabilities similar to those exhibited by GPT-4, such as generating detailed image descriptions and creating websites from hand-written drafts. They also observed other emerging capabilities in MiniGPT-4, including writing stories and poems inspired by given images, providing solutions to problems shown in images, and teaching users how to cook based on food photos. The researchers attribute these capabilities to the use of a more advanced large language model. The method is also computationally efficient: only the projection layer is trained, using roughly 5 million aligned image-text pairs followed by an additional 3,500 carefully curated high-quality pairs.

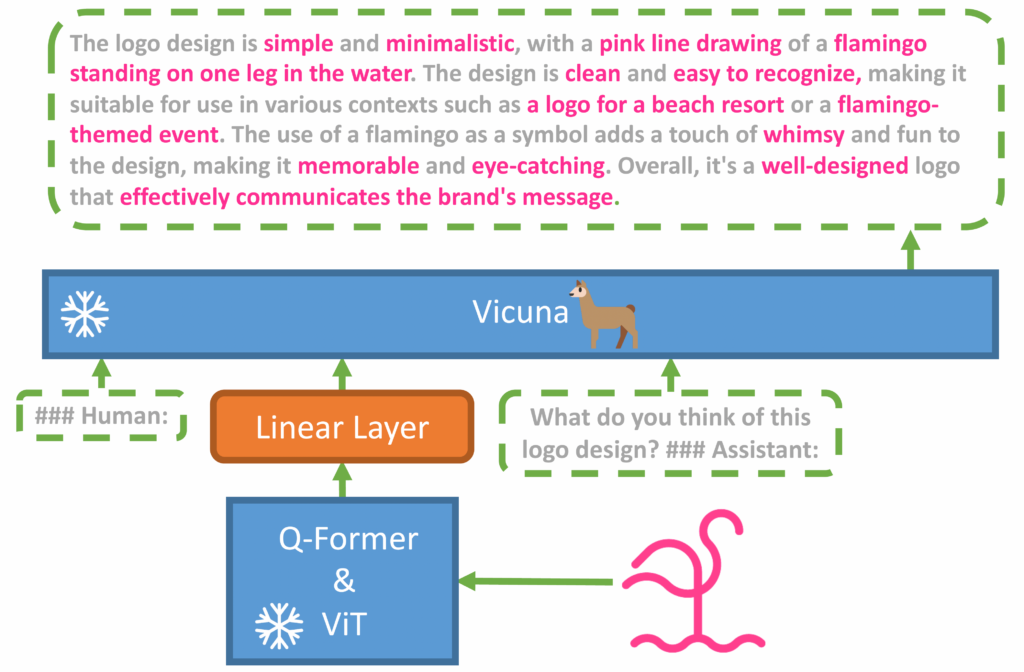

MiniGPT-4 Model Architecture

MiniGPT-4 consists of a vision encoder built from a pretrained ViT and Q-Former (the same visual encoder used in BLIP-2), a single linear projection layer, and the Vicuna large language model. Because the vision encoder and Vicuna both remain frozen, aligning the visual features with Vicuna only requires training the linear layer.
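To make the architecture concrete, here is a minimal pure-Python sketch of the one trainable component: a linear layer that maps each visual query token from the frozen Q-Former into Vicuna's embedding space, where the frozen LLM consumes it alongside ordinary text embeddings. It is an illustration, not the project's code. The sizes are assumptions: toy dimensions for the forward pass, and 768-dimensional query tokens (the BLIP-2 Q-Former width) projected to 5,120 (the hidden size of a 13B LLaMA-family model such as Vicuna-13B) for the parameter count.

```python
import random
from typing import List

Matrix = List[List[float]]


class LinearProjection:
    """Affine map y = W x + b applied per token; the only trainable piece here."""

    def __init__(self, in_dim: int, out_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.in_dim, self.out_dim = in_dim, out_dim
        # Weight shape is (out_dim, in_dim), the same layout torch.nn.Linear uses.
        self.weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)]
                       for _ in range(out_dim)]
        self.bias = [0.0] * out_dim

    def __call__(self, tokens: Matrix) -> Matrix:
        # Project each visual query token independently into the LLM space.
        out = []
        for tok in tokens:
            if len(tok) != self.in_dim:
                raise ValueError(f"expected {self.in_dim}-dim token, got {len(tok)}")
            out.append([sum(w * x for w, x in zip(row, tok)) + b
                        for row, b in zip(self.weight, self.bias)])
        return out

    def num_parameters(self) -> int:
        return self.in_dim * self.out_dim + self.out_dim


def linear_param_count(in_dim: int, out_dim: int) -> int:
    """Parameters of a linear layer with bias: weights plus one bias per output."""
    return in_dim * out_dim + out_dim


# Toy forward pass: 4 "query tokens" of width 8 projected to a 16-dim "LLM" space.
qformer_out = [[float(i + j) for j in range(8)] for i in range(4)]
proj = LinearProjection(in_dim=8, out_dim=16)
soft_prompt = proj(qformer_out)
print(len(soft_prompt), len(soft_prompt[0]))  # 4 16

# Parameter count at the assumed real sizes (768 -> 5120).
print(linear_param_count(768, 5120))  # 3937280
```

At those assumed sizes the projection has under 4 million parameters, which helps explain why training only this layer is cheap compared with updating a 13B-parameter LLM.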

Two-Stage Training Approach

The two-stage training approach consists of a conventional pretraining stage followed by a finetuning stage. The first stage used roughly 5 million aligned image-text pairs and took just 10 hours on four A100 GPUs. After this stage, Vicuna could understand the image, but the quality of its generated text suffered noticeably, for example through repeated or fragmented sentences.
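The efficiency claims are easier to compare in accelerator time. This short sketch does the arithmetic using only the figures quoted in this article (stage 1: about 5 million pairs, 10 hours, four A100s; stage 2, described next: about 7 minutes on one A100). It assumes the 5 million figure counts samples processed during stage 1; the helper names are illustrative.

```python
# Figures quoted in this article; the rest is plain arithmetic.
STAGE1_PAIRS = 5_000_000   # "roughly 5 million" aligned image-text pairs
STAGE1_HOURS = 10          # wall-clock hours
STAGE1_GPUS = 4            # A100s
STAGE2_MINUTES = 7         # "around 7 minutes"
STAGE2_GPUS = 1            # a single A100


def gpu_hours(hours: float, gpus: int) -> float:
    """Total accelerator time: wall-clock hours multiplied by GPU count."""
    return hours * gpus


def pairs_per_second_per_gpu(pairs: int, hours: float, gpus: int) -> float:
    """Average throughput, assuming the pair count equals samples processed."""
    return pairs / (hours * 3600) / gpus


stage1 = gpu_hours(STAGE1_HOURS, STAGE1_GPUS)
stage2 = gpu_hours(STAGE2_MINUTES / 60, STAGE2_GPUS)
throughput = pairs_per_second_per_gpu(STAGE1_PAIRS, STAGE1_HOURS, STAGE1_GPUS)

print(f"stage 1: {stage1:.0f} A100-hours")            # stage 1: 40 A100-hours
print(f"stage 2: {stage2:.2f} A100-hours")            # stage 2: 0.12 A100-hours
print(f"stage 1 / stage 2: {stage1 / stage2:.0f}x")   # stage 1 / stage 2: 343x
print(f"stage 1 throughput: {throughput:.1f} pairs/s per GPU")  # 34.7
```

By this rough measure, the finetuning stage uses a few hundred times less accelerator time than pretraining.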

To address this issue and improve usability, the researchers proposed a novel way to create high-quality image-text pairs by using the model itself together with ChatGPT. With this method they built a small yet high-quality dataset of 3,500 pairs. The second finetuning stage trains on this dataset using a conversation template, which significantly improves generation reliability and overall usability. To the researchers' surprise, this stage was computationally cheap, taking only around 7 minutes on a single A100.
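The curation loop can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the stage-1 model and ChatGPT are replaced by hypothetical callables (`describe`, `refine`), and the prompt wording, the `MIN_WORDS` threshold, the `###Human:`/`###Assistant:` template, and the `<ImageHere>` placeholder are illustrative choices. The flow is: ask the stage-1 model for a detailed description, ask it to continue if the text is short, have a stronger LLM clean it up, filter the result, and wrap accepted pairs in a conversation template for finetuning.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stand-in types: in practice these would wrap the stage-1 MiniGPT-4
# model and a ChatGPT call. Neither signature comes from the MiniGPT-4 codebase.
DescribeFn = Callable[[str, str], str]  # (image_id, prompt) -> generated text
RefineFn = Callable[[str], str]         # raw description -> cleaned description

DETAIL_PROMPT = "Describe this image in detail. Give as many details as possible."
MIN_WORDS = 80  # assumed "too short" threshold that triggers a follow-up request


def build_prompt(instruction: str) -> str:
    """Conversation-style template; <ImageHere> marks where the projected visual
    tokens would be spliced in. The exact delimiters are an assumption."""
    return f"###Human: <Img><ImageHere></Img> {instruction} ###Assistant:"


@dataclass
class Pair:
    image_id: str
    text: str


def curate(image_ids: List[str], describe: DescribeFn, refine: RefineFn,
           accept: Callable[[str], bool], max_continues: int = 2) -> List[Pair]:
    """Generate, lengthen, refine, and filter one description per image."""
    pairs = []
    for image_id in image_ids:
        text = describe(image_id, build_prompt(DETAIL_PROMPT))
        # Ask the model to keep going while the description is too short.
        for _ in range(max_continues):
            if len(text.split()) >= MIN_WORDS:
                break
            text += " " + describe(image_id, build_prompt("Continue"))
        text = refine(text)   # e.g. an LLM pass that fixes errors and repetition
        if accept(text):      # final quality filter (e.g. manual review)
            pairs.append(Pair(image_id, text))
    return pairs


def to_training_sample(instruction: str, pair: Pair) -> Dict[str, str]:
    """Wrap a curated pair in the conversation template for finetuning."""
    return {"image_id": pair.image_id, "prompt": build_prompt(instruction),
            "target": pair.text}


# Toy stand-ins so the sketch runs end to end.
def fake_describe(image_id: str, prompt: str) -> str:
    return f"a photo {image_id} " + "word " * 50


def fake_refine(text: str) -> str:
    return " ".join(text.split())  # collapse whitespace


curated = curate(["img1", "img2"], fake_describe, fake_refine,
                 accept=lambda t: "img2" not in t)
sample = to_training_sample("Describe this image.", curated[0])
print(sample["prompt"])
```

Keeping the model and API calls behind plain callables means the lengthening and filtering logic can be tested offline and then pointed at real services by swapping in real wrappers.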

Installation and Preparation

The team provided a comprehensive guide on installing and preparing the pretrained Vicuna weights and MiniGPT-4 checkpoint for enthusiasts and researchers. This allows users to try out the demo on their local machines. Instructions include cloning the repository, creating a Python environment, activating it, setting paths for Vicuna weights and pretrained MiniGPT-4 checkpoints, and launching the demo locally.

Training MiniGPT-4

The training of MiniGPT-4 consists of two alignment stages, both explained in the project documentation. The first pretraining stage involves downloading and preparing the LAION and Conceptual Captions (CC) datasets and then launching training, during which the projection layer learns to map the visual features into Vicuna's input space. In the second finetuning stage, the team uses the small, high-quality image-text dataset it created, converted to a conversation format, to further align MiniGPT-4. Instructions for downloading and preparing the second-stage dataset are also provided. To launch the second-stage alignment, first specify the path to the checkpoint trained in stage 1 in train_configs/minigpt4_stage2_finetune.yaml; the output path can also be set in this file. Then run the following command, replacing NUM_GPU with the number of GPUs to use (the researchers used a single A100):

```bash
torchrun --nproc-per-node NUM_GPU train.py --cfg-path train_configs/minigpt4_stage2_finetune.yaml
```

After the second stage alignment, MiniGPT-4 is able to coherently discuss images and offer user-friendly interactions.

Implications and Future Prospects

MiniGPT-4 not only demonstrates how a stronger large language model can enhance vision-language understanding and multi-modal generation, but also shows that this alignment can be trained with modest compute. Its emerging capabilities point to new applications that combine vision and language understanding.

Potential applications span various domains, such as virtual assistants that can provide cooking guidance based on food photos, educational platforms that generate stories and poems inspired by images, problem-solving tools that offer solutions to challenges depicted in pictures, and web development services that create websites from hand-written drafts.

The team’s innovative approach to creating high-quality image-text pairs using the model itself and ChatGPT together also provides insights for future research and development in AI, particularly in the area of vision-language understanding. As the field continues to advance, we can expect even more powerful and efficient models that will broaden the horizons of AI applications, making them increasingly versatile, intuitive, and accessible.

Check out the demo here: https://minigpt-4.github.io/

Source and instructions: https://github.com/Vision-CAIR/MiniGPT-4
