Abacus.ai Unveils Giraffe Models, Aiming to Advance Context Length Extrapolation in LLMs

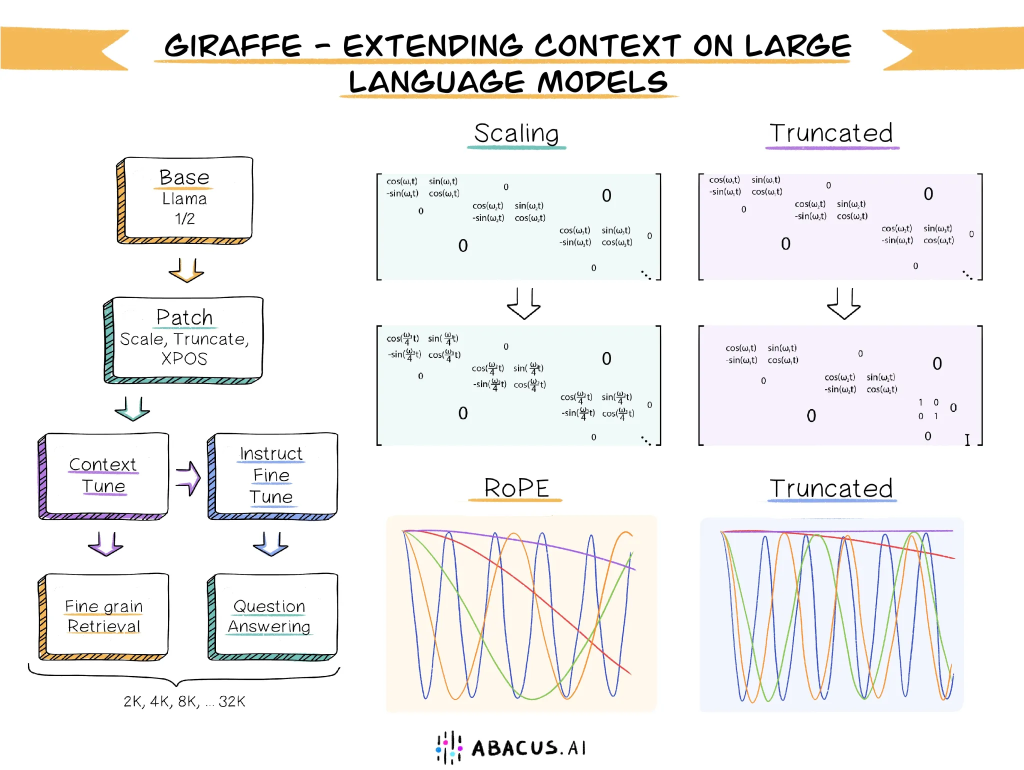

Abacus.ai today released a paper on arXiv titled “Giraffe: Adventures in Expanding Context Lengths in LLMs.” The paper introduces the company’s new family of models, Giraffe, built to explore the limits and possibilities of context length extrapolation in Large Language Models (LLMs).

The Giraffe family consists of finetuned models: 4k and 16k variants derived from the LLaMA base, and a 32k variant finetuned from LLaMA2. Abacus.ai has released the model weights on HuggingFace, along with the training code, evaluation datasets, and scripts, for use by the research community.

Extrapolating Context Lengths in LLMs

The primary focus of the Giraffe project is context length extrapolation: using an LLM trained on a short context length to process longer contexts without additional training. The ability to extrapolate to longer contexts matters for a wide range of applications, such as information retrieval from large data corpora, chatbot conversations, and code assistance on extensive codebases.

However, simply training the model on longer contexts is not straightforward. Self-attention, a core part of modern LLM architecture, scales quadratically in both memory and computation as context length increases, so long-context training quickly runs into hardware limits. This constraint has motivated the development of methods for zero-shot extrapolation to unseen context lengths.
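To make the quadratic growth concrete, the sketch below estimates the memory needed to hold one layer's full attention score matrices at several context lengths. The head count (32) and 2-byte values are illustrative assumptions, not figures from the paper, and the function name is hypothetical. It models a naive implementation that stores every score; memory-efficient attention kernels avoid materializing the full matrix, although the computation still grows quadratically.

```python
def attention_score_bytes(context_len: int, num_heads: int = 32,
                          bytes_per_value: int = 2) -> int:
    """Bytes for one layer's attention scores: one (n x n) matrix per head.

    num_heads and bytes_per_value are illustrative assumptions.
    """
    return num_heads * context_len * context_len * bytes_per_value


if __name__ == "__main__":
    for n in (2048, 4096, 16384, 32768):
        gib = attention_score_bytes(n) / 2**30
        print(f"{n:>6} tokens -> {gib:6.2f} GiB per layer")
```

Under these assumptions, doubling the context length quadruples the score memory, which is why going from a few thousand tokens to tens of thousands is so costly.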

Investigating Approaches and Proposing Solutions

The Giraffe paper surveys the most prominent approaches to context length extrapolation and tests them to determine their effectiveness. It also introduces a couple of new methods, including one called truncation, which shows promising results.

Part of the challenge in assessing long-context performance lies in the evaluation methodology. Traditionally, next-token perplexity has been used to measure a model’s predictive ability. However, the paper shows that perplexity distinguishes long-context performance between models less well than newly introduced tasks that measure recall accuracy rather than text coherence.
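For reference, perplexity is the exponential of the average negative log-likelihood per token. The sketch below computes it from a list of per-token log-probabilities and then offers one plausible intuition, which is an assumption for illustration and not an argument taken from the paper: when a single token out of a thousand depends on recalling a distant fact, getting it badly wrong barely moves the average. The probabilities used are made up.

```python
import math


def perplexity(token_logprobs):
    """exp(mean negative log-likelihood), using natural-log probabilities."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# Hypothetical numbers: 999 "easy" tokens at p=0.5, plus one token that
# requires recalling a fact from far back in the context.
easy = [math.log(0.5)] * 999
recalls_fact = perplexity(easy + [math.log(0.9)])
misses_fact = perplexity(easy + [math.log(0.001)])

if __name__ == "__main__":
    print(f"perplexity when the fact is recalled: {recalls_fact:.4f}")
    print(f"perplexity when the fact is missed:   {misses_fact:.4f}")
```

In this toy setting the two perplexities differ by well under one percent, while a recall-accuracy score on the same token would go from correct to incorrect.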

Three new tasks, LongChat-Lines, FreeFormQA, and AlteredQA, form the basis of this evaluation approach. LongChat-Lines is a key-value retrieval task, while FreeFormQA and AlteredQA are question-answering datasets based on Wikipedia. All three are also released as HuggingFace datasets.
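To illustrate what a recall-focused evaluation measures, here is a toy key-value lookup scored by exact match. Everything in it is hypothetical: the prompt format, the function names, and the stand-in "model" (a regex lookup used only so the sketch runs without an LLM). It does not reproduce the format of the released datasets.

```python
import random
import re


def build_prompt(num_lines, seed=0):
    """Create a context of key/value lines plus one lookup question."""
    rng = random.Random(seed)
    keys = rng.sample(range(10_000, 100_000), num_lines)  # unique 5-digit keys
    records = {f"key-{k}": rng.randint(0, 99_999) for k in keys}
    target = rng.choice(list(records))
    body = "\n".join(f"{k}: value is {v}" for k, v in records.items())
    return f"{body}\nWhat is the value for {target}?", records[target]


def recall_accuracy(model, num_lines, trials=20):
    """Fraction of trials where the model's answer exactly matches the value."""
    hits = 0
    for seed in range(trials):
        prompt, expected = build_prompt(num_lines, seed)
        hits += int(model(prompt).strip() == str(expected))
    return hits / trials


def oracle_model(prompt):
    """Stand-in for an LLM call: finds the answer with a regex."""
    target = re.search(r"value for (key-\d+)\?", prompt).group(1)
    return re.search(rf"^{target}: value is (\d+)$", prompt, re.M).group(1)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, recall_accuracy(oracle_model, n))
```

Replacing `oracle_model` with a real model call and increasing `num_lines` would show how accuracy changes as the context grows, which is the kind of signal a recall task provides directly.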

The Path Forward

Despite these developments, many questions about context length extrapolation in LLMs remain open. None of the methods studied in the paper fully achieves genuine extrapolation without performance degradation. The team at Abacus.ai says it will continue research in this area to address these challenges.
